I live a few miles away from a rapidly growing settlement called Brough. I see it mentioned from time to time as a town in the local press, but I've always thought of it as a large village. It has been a settlement for a long time; it was a town, Petuaria, when Rome ruled this area.

Not knowing whether a place in England is a town or a village seems easy to resolve: does it have a town council or a parish council? Well, in the case of Brough it is not quite so easy. The local council is a town council, but it covers both Elloughton and Brough and is called Elloughton-cum-Brough Town Council. It is not that uncommon to find a civil parish or town council with multiple settlements within its bounds, but if the council is a town council, surely one of the settlements needs to be a town.

It is clear to me there are two settlements here. Road signs show both places, sometimes in different directions, and OS Locator shows the street names as streetname : Brough : Elloughton-cum-Brough and streetname : Elloughton : Elloughton-cum-Brough.

OS Open Names shows both places as populatedPlace: village. So is it possible to have a town council presiding over an area with two villages and no towns in it?

I've asked the Local Authority, who, after all, provide the data for OS Locator and OS Open Names, what they think.

The answer is a political hot potato at the moment, as Elloughton-cum-Brough town council have recently decided to spend £4000 on a mayoral-style chain for the head of the council. It seems he can legitimately call himself mayor after the town council made the appropriate change in 2011. That's a lot of money to spend on what some call trinkets at a time when there are council cuts elsewhere.

Edit:

The clerk to Elloughton-cum-Brough Town Council has contacted me and kindly given me information about the process that resulted in the parish council becoming a town council. She also confirmed that both Brough and Elloughton are villages. That means that the town council area has two villages and no towns in it.

Friday, 27 November 2015

Monday, 28 September 2015

Extracting building heights from LIDAR

The UK Environment Agency have released some LIDAR data in Digital Elevation Model (DEM) format. It includes Digital Terrain Model (DTM) data and Digital Surface Model (DSM) data. The DSM data includes buildings and trees, while the DTM is processed to remove these so the underlying terrain is visible. Tim Waters asked whether, if you subtract the DTM from the DSM, you would be left with just building and tree heights. I'd started to look at this and it turns out building heights are extractable in this way.
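The subtraction itself is simple, and a minimal sketch may make it concrete. This is not the s-t.py script; it assumes the two grids have already been read into lists of rows of the same shape, and that missing cells are marked with -9999, which is an assumption to check against the real files.

```python
from typing import List, Optional

NODATA = -9999.0  # assumed "no data" marker; check the real files


def subtract_grids(dsm: List[List[float]], dtm: List[List[float]],
                   nodata: float = NODATA) -> List[List[Optional[float]]]:
    """Return DSM minus DTM cell by cell; None where either grid has no data."""
    if len(dsm) != len(dtm):
        raise ValueError("grids have different numbers of rows")
    diff = []
    for surface_row, terrain_row in zip(dsm, dtm):
        if len(surface_row) != len(terrain_row):
            raise ValueError("grids have different numbers of columns")
        diff.append([
            None if s == nodata or t == nodata else round(s - t, 2)
            for s, t in zip(surface_row, terrain_row)
        ])
    return diff


# Tiny made-up grids: one cell sits on a building, one has no surface data.
dsm = [[12.5, 12.4], [20.1, NODATA]]
dtm = [[12.5, 12.3], [11.9, 11.8]]
print(subtract_grids(dsm, dtm))
```

Cells on open ground come out at or near zero, and cells on a roof or tree canopy come out at the height above the terrain.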

I have written a script to do the subtraction and create both the difference file and an SQL file to load the data into a PostgreSQL table. I created a hill shading image from one of the difference files to see what features have been stripped from the DTM data. Here's a jpg version of it:

You can see that the buildings and some other features are all well defined. Phil Endecott suggested using the DSM data to create building outlines which could be traced in OSM. His images look good. I would suggest starting his process with this difference data as all the terrain detail has been removed so it may be even better.

Once the SQL file of height data has been loaded into a PostgreSQL database with the PostGIS extension installed, we can run some queries on it. I selected some OSM building polygons in the range of the loaded data and found which of the height points fell within each polygon. The highest of these is the highest point of the building above the surrounding terrain. I've written a script as a place-holder to extract all the heights for a rectangular area. Someone could extend this to make a file to upload to OSM to add the heights for every building in the defined area. I feel this is clearly an import and so the usual OSM import process needs to be gone through before the import takes place.
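The point-in-polygon step could look something like the query below. It is a sketch, not the contents of match.py: it assumes the default osm2pgsql table planet_osm_polygon with its geometry column way stored in EPSG:3857, and the eadata table described in this post. The function only builds the query and its parameters, so it can be handed to whatever database driver you use.

```python
from typing import Tuple

# Assumptions: osm2pgsql's default planet_osm_polygon table, geometry column
# "way" in EPSG:3857, and an eadata table with height and locn (EPSG:27700).
HEIGHT_QUERY = """
SELECT p.osm_id, max(e.height) AS height
FROM planet_osm_polygon AS p
JOIN eadata AS e
  ON ST_Contains(ST_Transform(p.way, 27700), e.locn)
WHERE p.building IS NOT NULL
  AND p.way && ST_Transform(ST_MakeEnvelope(%s, %s, %s, %s, 27700), 3857)
GROUP BY p.osm_id
ORDER BY p.osm_id;
"""


def height_query(min_e: float, min_n: float,
                 max_e: float, max_n: float) -> Tuple[str, tuple]:
    """Return (sql, params) for buildings inside an OS grid rectangle."""
    if min_e >= max_e or min_n >= max_n:
        raise ValueError("rectangle must have positive width and height")
    return HEIGHT_QUERY, (min_e, min_n, max_e, max_n)


sql, params = height_query(500000, 430000, 501000, 431000)
print(params)
```

With a driver such as psycopg2 the pair would be passed as cursor.execute(sql, params); each returned row is an OSM ID and the highest difference value found inside that building.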

I think there is real merit in using this data to extract building heights, which are needed for the 3D images of city buildings.

The two scripts are available here: http://www.raggedred.net/shared/heights.zip. The first (s-t.py) needs matching DSM and DTM files and outputs a difference file and the SQL to load into a database table. This will work for any of the resolutions published by the EA. The second, much less polished, script (match.py) defines a rectangle, extracts the OSM buildings for that rectangle and then finds the height data for each building. I wrote it as a script so it can be extended to create a data file for processing or uploading, or so overlay tiles could be made from it. I loaded some OSM data with osm2pgsql (which would normally be used for rendering) and added the table for the heights data to the database. The SQL for the table is:

CREATE TABLE eadata
(
  hid serial NOT NULL,
  height double precision,
  locn geometry(Point,27700),
  CONSTRAINT eaheight_prim PRIMARY KEY (hid)
)
WITH (
  OIDS=FALSE
);
CREATE INDEX eadata_index
  ON eadata
  USING gist
  (locn);

The output SQL data can be loaded into this with the command:

psql -d <database> -f <sqlfile>

where <database> and <sqlfile> are whatever you used. The table name eadata and field names are hard-coded in s-t.py.
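To give an idea of what the generated SQL might contain, here is a hedged sketch of turning one grid cell into an INSERT for the eadata table. It is not taken from s-t.py. It assumes the grid is described by the easting and northing of its lower-left corner, a cell size in metres and a row count, with rows stored from north to south; the function names are made up for illustration.

```python
def cell_centre(xll: float, yll: float, cellsize: float,
                nrows: int, row: int, col: int) -> tuple:
    """Easting and northing of a cell centre; row 0 is the northernmost row."""
    easting = xll + (col + 0.5) * cellsize
    northing = yll + (nrows - row - 0.5) * cellsize
    return easting, northing


def insert_statement(easting: float, northing: float, height: float) -> str:
    """One INSERT for the eadata table, with the point in EPSG:27700."""
    return (
        "INSERT INTO eadata (height, locn) VALUES "
        f"({height:.2f}, ST_SetSRID(ST_MakePoint({easting:.2f}, {northing:.2f}), 27700));"
    )


# Made-up example: top-left cell of a 4-row grid with 50cm spacing.
e, n = cell_centre(500000, 430000, 0.5, 4, 0, 0)
print(insert_statement(e, n, 8.2))
```

For a full tile, a COPY-based load would be much faster than one INSERT per point, but the INSERT form is easier to read and check by eye.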

It is important to say that I would not use a rendering database to create the upload data from as some fields will be missing. osm2pgsql is a lossy process. You can use the OSM ID to extract a current version from the API or from Overpass to add the height data to. I used the rendering data for convenience as I already had it available and to satisfy myself that the process works.

I hope this is useful to someone. Please feel free to ask for more information if I've not made anything clear.

Sunday, 20 September 2015

More LIDAR goodness

I looked into LIDAR data from the UK Environment Agency some weeks ago. I needed it to help a local group who are investigating flood mitigation options. The data was listed as being about £26,500 but we got a 100% discount if we used it for restricted, research use, so we could afford it! A few weeks after I'd used the height data for the group I got an email from the Environment Agency. They said the data was being made available as Open Data under the Open Government Licence. So now I could use it for any other purposes at no cost. You can get the data from http://environment.data.gov.uk/ds/survey

I decided to make a detailed relief map of part of the area close to home. The data doesn't cover the whole country, only parts that are deemed at risk of flooding. All of Hull and the river Hull catchment area are included in this. I've only looked at my local area so other areas may vary.

The data is downloaded as Ordnance Survey 10km grid tiles. There are 2m, 1m, 50cm and 25cm options and digital terrain model and digital surface model options too, so let's look at these options, but first a bit about LIDAR.

LIDAR is a technology that uses laser light to measure a distance repeatedly over an area to create a 3D model of that area. If the LIDAR transceiver is mounted at a fixed point it can pan around to record a very detailed image in 3D of everything that can be seen from that point. It works very well in this way inside a building or a cave to make a very accurate model. The US Space Shuttle flew a mission that used radar, a related ranging technique, to record the height of the surface of the Earth from space. This is available as SRTM.

More recently LIDAR equipment has been flown in aircraft. The difficulty of making useful measurements from an aircraft should not be underestimated. The only data LIDAR returns is distance to the target, so knowing PRECISELY where the aircraft is in 3D is the real problem. GPS is hopeless at altitude measurement and scarcely good enough for lateral location, barometric height measurements vary over time and location, and inertial dead-reckoning accuracy falls off with time. A combination of all of these plus post-processing can result in useful data.

The Environment Agency LIDAR distance options specify the distance between the sample points, the 2m option having less detail than the 25cm option. The area that these options cover varies with the highest detail covering the smallest area. I chose the 50cm option as it covered the area I wanted at the highest level of detail. The detail does make for larger datasets and more processing needed to do anything with it.

Clearly the LIDAR measures the distance to the first object it encounters from the aircraft, so it measures tree tops, building roofs and even vehicles. This is known as the digital surface model. This is often a composite from multiple images, as this data is, to compensate for location inaccuracies and to help remove things like vehicles. To get a useful model of the real landscape, without trees and buildings, the data is post processed to create the digital terrain model. This is the data I have used.

The OGL data was different from the data the Environment Agency originally supplied. The original data was in smaller grid squares and the height was rounded to the nearest centimetre. The OGL data is in bigger squares which makes it a bit easier to process but seems to use 18 decimal places of a metre, which is smaller than the diameter of an atom.

I wanted to create a relief map and make contours from the data and, not for the first time, GDAL had the tools. The data uses the UK Ordnance Survey projection, known as OSGB36 or EPSG:27700, so to use any OSM data with it I would need to reproject to WGS84 or EPSG:4326.

To make a relief map I used gdaldem with the hillshade option on each of the datafiles. These need to be joined together to make a larger image, so the option -compute_edges is also needed. The complete command is:

gdaldem hillshade -compute_edges infile relieftiff/outfile.tif

The output is geoTIFF files which can be merged into a single (large) geoTIFF with the command

gdal_merge.py -o big.tif *.tif

This creates a geoTIFF file which has the image of the relief in a TIFF image and also has the locations of the edges in the original OS projection.

The next step is to use gdalwarp to reproject the large tiff file to one in the WGS84 projection. The command describes the source and target projection and filenames. There are significant missing pieces in the large TIFF as the available data was not rectangular. The -srcnodata 0 and -dstalpha makes missing data transparent rather than black.

gdalwarp -s_srs epsg:27700 -t_srs epsg:4326 -srcnodata 0 -dstalpha big.tif bigr.tif

The new TIFF file is what we want to see, but now it needs turning into tiles to be displayed on a slippy map. I decided that zoom level 13 to 18 would give a useful display. To make these tiles I used gdal2tiles.py, specifying the reprojected TIFF image, the zoom levels and the folder to put the tiles into.

gdal2tiles.py -z13-19 bigr.tif tiles

This makes a set of tiles in the TMS format in the specified folder, in this case tiles.
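With many data files it can help to script the four steps. The sketch below only builds the command lines described above, as lists ready for subprocess.run; it does not run them. The function name and the idea of naming each hillshade after its input file are my assumptions, and the zoom range is left as a parameter.

```python
import os
import subprocess
from typing import List


def relief_commands(dem_files: List[str], zoom: str,
                    shade_dir: str = "relieftiff") -> List[List[str]]:
    """Build the hillshade, merge, reproject and tile commands in order."""
    commands = []
    shaded = []
    for src in dem_files:
        name = os.path.splitext(os.path.basename(src))[0] + ".tif"
        dst = os.path.join(shade_dir, name)
        commands.append(["gdaldem", "hillshade", "-compute_edges", src, dst])
        shaded.append(dst)
    commands.append(["gdal_merge.py", "-o", "big.tif"] + shaded)
    commands.append(["gdalwarp", "-s_srs", "epsg:27700", "-t_srs", "epsg:4326",
                     "-srcnodata", "0", "-dstalpha", "big.tif", "bigr.tif"])
    commands.append(["gdal2tiles.py", "-z", zoom, "bigr.tif", "tiles"])
    return commands


def run_all(commands: List[List[str]]) -> None:
    """Run each command in turn, stopping at the first failure."""
    for command in commands:
        subprocess.run(command, check=True)


for command in relief_commands(["a.asc", "b.asc"], "13-18"):
    print(" ".join(command))
```

Calling run_all on the result would execute the steps, provided the GDAL tools are installed and the output folder exists.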

Another way to visualise the LIDAR data is contours. I decided to create a set of overlay tiles that are transparent except for the contours. These can have a different density of contours at each zoom level. I chose the smallest contour step to be shown at the highest zoom level to be 0.2 metres. The GDAL tool for the job is gdal_contour which makes a shapefile with a linestring for each contour. The command is

gdal_contour -i 0.2 -a height infile outshapefile.shp

The resulting shapefile needs to be reprojected to WGS84. The tool to reproject shapefiles is ogr2ogr, which takes the output file first and the input file second:

ogr2ogr -t_srs epsg:4326 -s_srs epsg:27700 new.shp outshapefile.shp

I decided to use Mapnik to make the contour overlay tiles. Mapnik can use shapefiles but specifying the long list of shapefiles created above would be a problem, so I loaded the shapefiles into a PostgreSQL table in a database with PostGIS enabled. PostGIS comes with shp2pgsql to do this:

shp2pgsql -a -g way new.shp eacontours > new.sql

This makes SQL to load the shapefile into the eacontours table, putting the geometry in the field called way. To load this into a database called ukcontours which already has the PostGIS extension installed the command is

psql -d ukcontours -f new.sql

I then designed the overlay with TileMill to create the transparent tiles with more contours at higher zoom levels.

You can see the results at http://relief.raggedred.net. I added a water overlay and a roads overlay (thanks to MapQuest for the roads) to help position the relief imagery.

Tuesday, 11 August 2015

Strapped

Hull is a low-lying city, as are some of the towns and villages that surround it. I have blogged before about the serious flooding in the area in 2007, just before I started mapping in OSM. What a lot of people won't know, even locals, is that flooding is a regular occurrence in some parts of the area. This flooding is on a much smaller scale than happened in 2007, but it still causes misery for anyone whose house is flooded. The Cottingham Flood Action Group have tried to understand what causes this regular flooding and then campaign to fix the causes. I have helped a bit by providing maps and overlaying data from various sources. Some of these sources are not open so I don't want to publish them here.

One source of contention is riparian ownership. This can apply when a water course runs through your land and you may then be required to maintain it. For example a ditch carrying surface water away may need to be cleaned out so water can actually flow in the ditch and not back up causing a flood elsewhere.

The East Riding of Yorkshire council is strapped for cash. They are pushing people to maintain ditches and in some cases ditches in culverts to prevent flooding, claiming these people are riparian owners of the ditches. To be a riparian owner the watercourse must be wholly within the property or form the boundary of the property. If the watercourse forms a boundary, then the property owner owns the watercourse to the mid line.

I have matched the Land Registry Inspire dataset to my surveys of a few of these and it clearly shows that the ditch is many metres outside of the privately owned land and well within the area of the public highway. This means that no matter how strapped the council is for cash, they must maintain the ditch or culvert. Of course, my survey probably isn't enough to convince the council, but it certainly should prompt people to get more evidence.

Don't believe your council. If they claim you are responsible for something unusual, make them prove it and make sure you get enough facts to stand up to them. The council may be right, but they may just be trying to get you to pay for something they are responsible for.

Thursday, 25 June 2015

Lidar

Today I received two DVDs with Lidar data on them. They cover Hull and some of the surrounding area, with heights recorded to the centimetre at 50cm spacing. The data is DTM, so no buildings or trees, though DSM is available too.

I've just finished making a replacement window for the garage - the old one was completely rotten - so I fitted it this afternoon while the weather was fine. Now that's done I can turn to this detailed Lidar data.

Saturday, 6 June 2015

When does a postcode start?

I have mapped a fairly large area, Hull and most of East Yorkshire. When there was nothing on the map I just added what I found, but now there is a fairly complete road network. Keeping that up to date can be hard work - how do I know what has been added since the last time I looked? OS Locator is really valuable for this as new roads appear on there as OS maps them. It would be better to know developments are starting and get in early, and I think there may be a way: postcodes.

New postcodes are allocated all the time. Around a thousand are created every month in GB. The Office for National Statistics publish a postcode list based on Codepoint Open data but with some extra fields. One of these is the month the postcode was set up. Looking at the recent ones may let us find places that need surveying.
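Picking out the recent postcodes is a simple filter on that month field. The sketch below assumes the list is a CSV with a postcode column called pcds and an introduction month column called dointr in YYYYMM form; those column names are assumptions to check against the header of the actual file, and the sample rows are invented.

```python
import csv
import io


def recent_postcodes(rows, since, code_field="pcds", intro_field="dointr"):
    """Yield (postcode, month) for rows introduced in or after `since` (YYYYMM)."""
    for row in rows:
        month = (row.get(intro_field) or "").strip()
        # Zero-padded YYYYMM strings sort correctly as plain text.
        if len(month) == 6 and month.isdigit() and month >= since:
            yield row[code_field], month


# Invented sample rows, standing in for the real file.
SAMPLE = """pcds,dointr
HU15 1AA,198001
HU15 9ZZ,201504
"""

found = list(recent_postcodes(csv.DictReader(io.StringIO(SAMPLE)), "201501"))
print(found)
```

For the real file, the StringIO would be replaced by an open file handle, and the results could be loaded into a database or plotted directly.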

I've created a map to visualise these: http://pcage.raggedred.net

I hope this proves useful.

Wednesday, 3 June 2015

New postcode layer

I've just finished extracting the data from a full download of OS Open Names and setting up a layer to see the new postcodes. The postcode data is only a centroid for each postcode area.

I maintain a layer of postcodes from the Office for National Statistics, one from Codepoint Open and now one from Open Names. The Codepoint Open and ONS postcodes are in the same locations, though Codepoint Open data is a bit more up-to-date right now. The OS Open Names postcode locations are now sub-metre, so I expected that the centroid might be in a slightly different location, but some are substantially different as you can see here.

The Codepoint Open are in red, the OS Open Names are in magenta.

I don't store any of these tiles, I render them on the fly. Real tiles are often stored in a hierarchy of folders in the form of

tilename-base/zoom/xposition/yposition.png

Since I don't store any of these, when someone requests a tile they would normally get a "Not found (404)" error. I trap these errors and use the folder hierarchy to extract the postcode centroids from a database for the zoom, x and y area of the tile. This is used to render a tile with a transparent background and centroid markers on it. I do this because I didn't want to use the considerable disk space the real tiles would take up, especially as I create tiles up to zoom level 21 to make editing easier with the layer switched on.
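Working out which centroids belong on a requested tile means turning the zoom, x and y from the path into a bounding box. A minimal sketch of that step, assuming the usual slippy map numbering where y counts down from the top of the map (not the flipped TMS numbering); the function names and example path are made up:

```python
import math
import re

TILE_PATH = re.compile(r"/(\d+)/(\d+)/(\d+)\.png$")


def parse_tile_path(path):
    """Pull (zoom, x, y) out of a path ending in zoom/x/y.png."""
    match = TILE_PATH.search(path)
    if match is None:
        raise ValueError("not a tile path: " + path)
    return tuple(int(part) for part in match.groups())


def tile_bounds(zoom, x, y):
    """Return (west, south, east, north) in degrees for a slippy map tile."""
    n = 2 ** zoom

    def lon(xt):
        return xt / n * 360.0 - 180.0

    def lat(yt):
        return math.degrees(math.atan(math.sinh(math.pi * (1 - 2 * yt / n))))

    return lon(x), lat(y + 1), lon(x + 1), lat(y)


zoom, x, y = parse_tile_path("/postcodes/16/32400/21000.png")
print(tile_bounds(zoom, x, y))
```

The four values can then go straight into a bounding-box query against the table of centroids, once they are in the same projection as the stored points.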

I also extracted the road name and place name data, reprojected all of the location data from OS grid references to lon and lat and stored them for later use. If anyone wants to see this I'll happily pass it on, just ask.

If you want to see the new postcodes you can see the new layer on oscompare. Make sure you open the layer selection (blue+white +) and select what you want to see. You need to zoom in to see the postcodes.

Tuesday, 2 June 2015

Twenty by twenty

It seems that there are no missing sections in the OS Open Names downloads from OS OpenData. The sections are 20x20 km squares.

Monday, 1 June 2015

OS Open Names

As I noted in the previous post, Ordnance Survey have said that OS Locator open data is being withdrawn and replaced by OS Open Names. The file is a strange mixture of three types of data jumbled up, so I needed to separate them into something useful. The three types are postcode centroids, place names and road names. All of the locations are in the projection that Ordnance Survey use, OSGB36 or EPSG:27700. The great thing about the values used in this projection is that they are measured in metres from a fixed point. The previous OS open data, such as OS Locator or CodePoint Open (postcode centroids), use this projection too, of course, which fixes a location to a square metre. OS Open Names, however, has a decimal component so the location is now specified to the nearest millimetre - more precise than OSM works to. The east-west specification in OS parlance is known as eastings and the north-south specification is known as northings. Eastings and northings can be converted to longitude and latitude for use in OSM using, for example, GDAL libraries.

The postcode centroids are very simple records. Each needs only the postcode and the easting and northing for the location of the centroid. Comparing a few OS Open Names centroids with Codepoint Open records shows that the centroids are in slightly different locations.

OS Locator lists each named road with its name, a centroid and a bounding box for the road. There is also a hierarchy of place, borough, county or unitary authority. All of this is in OS Open Names and the increased millimetre precision is there too.

I've not used the gazetteer of place names, but the name and location data are available as you would expect.

All of the data types have some URIs in the files too for many of the fields. Many that I have tried to open are dead links, but some show the hierarchy of data. I'm not sure why this is useful.

I think I'm going to write a routine to unzip and process all of the OS Open Names data. I'll load the data into a database, reproject it for OSM and make the processed data available if anyone wants it.

First, however, OS need to supply the missing sections of their open data.

OS Locator is to be withdrawn

I just received an email from Ordnance Survey, telling me that OS Locator, part of the OS Open Data, is to be withdrawn in a year's time. They say that OS Open Names has been published and that replaces OS Locator. They hint that it may replace CodePoint Open too, though there's no notice of withdrawal for that yet. I thought I should look at OS Open Names to see what it offers.

The first thing I saw was that the data is broken into the OS large-scale grid squares, which break the country into a 13x7 grid with 55 squares with actual data in them. Each one of these needs to be downloaded separately to cover GB. That is a pain to start with. I requested SE and TA, which are both needed to cover the village I live in. I expected each downloaded .zip file to contain its area broken down into 100 separate sections, but I quickly realised that there were only 25 in SE and 10 in TA. Much of TA covers the North Sea, so there should be fewer sections, but clearly many were still missing. I downloaded another area and again the same 75 sections were missing. I emailed customer service at OS to point this out, with no answer yet.

I pressed on to look at the data in the sections that were there. The data is in CSV format (there was another choice). There is a summary of column headings, but the data is a strange muddle of three types of data. Much of the data is a URL to an OS website, with the data summarised on a page for each column of each line of the text. It appears that there is a record type for place names, a record type for postcode centroids and a record type for named roads, all freely mixed up throughout the file. There doesn't seem to be a field to simply identify what the record type is for each line. The first field is an id field. For place names it seems to start with osgb and has a number after that; for postcodes the id is the postcode with spaces removed; and for road names the id looks like a GUID. There doesn't seem to be a pattern to the order the data is in.

I'm going to throw some code together to disentangle these record types and see what useful data is then available. Hopefully we will not have lost anything useful in this process, though it does look as though processing this open data is going to be a bit harder than it used to be.
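As a first attempt at disentangling the records, the id patterns described above can be used to sort rows into three piles. This is only a minimal sketch: the three regular expressions are my guesses from looking at the ids, not anything OS has specified, and the names `classify_id` and `split_records` are just for illustration.

```python
import csv
import io
import re
from collections import defaultdict

# Patterns guessed from the ids seen in the files (assumptions, not an OS spec).
PLACE_ID = re.compile(r"^osgb\d+$", re.IGNORECASE)
ROAD_ID = re.compile(
    r"^[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}$", re.IGNORECASE
)
POSTCODE_ID = re.compile(r"^[A-Z]{1,2}[0-9][0-9A-Z]?[0-9][A-Z]{2}$")


def classify_id(record_id):
    """Guess the record type from the first (id) field of a row."""
    record_id = record_id.strip()
    if PLACE_ID.match(record_id):
        return "place"
    if ROAD_ID.match(record_id):
        return "road"
    if POSTCODE_ID.match(record_id):
        return "postcode"
    return "unknown"


def split_records(csv_file):
    """Group the rows of an open CSV file by guessed record type."""
    groups = defaultdict(list)
    for row in csv.reader(csv_file):
        if row:  # skip blank lines
            groups[classify_id(row[0])].append(row)
    return groups
```

Anything that lands in the "unknown" pile shows where the guessed patterns are wrong, which is a useful check before trusting the split.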

Anyone would think OS don't want to release open data.

Edit: There are two fields which distinguish these different record types.

Road Closure

The road I live on is closed. A house needs some work done on it. The house wall is tight against the road and that part of the road is also narrow. Scaffolding to work on the house now stands to the middle of the road, so the road is closed to vehicles for two weeks.

Should I change the road in OpenStreetMap to reflect this temporary closure? I have decided not to change it. Anyone trying to use the road in a vehicle will be directed to another route by the signs. Most are locals, so they will know the alternatives anyway. If I make a change to OSM and someone downloads a snapshot of the data with that change in place, they may not download a new version for a while and so have the break in the road long after it has actually reopened. Anyway the road exists - it's just not accessible to vehicles for a while.

This closure has caused some very poor driving standards to surface. The alternative routes are small residential roads with parked cars and tight and narrow corners. People using these routes seem to need to take out some annoyance with their normal route being closed by roaring through these streets at ludicrous speeds with no intention to give way to anyone else.

The closure was a surprise to me. I saw a sign go up announcing the closure a couple of days before it happened. When I tried to find out why the road was being closed I drew a blank. The council didn't respond to my request for information and searching their website produced nothing at all. A neighbour pointed out that there was a notice in the local newspaper which was reproduced online. Very few people still read the Hull Daily Mail - it has been steadily descending way below mediocrity for many years. The online notice didn't appear in my searches because it is not sensibly indexed. The HDM website is a nightmare to use, with any page constantly bouncing around as adverts and videos randomly pop up and freeze the pages.

Councils are obliged to publish public notices about some things, such as road closures. It's clear to me that publishing in the local newspaper is not a viable way to do this, as newspapers no longer reach much of the population. A sensible addition would be to publish the notice on the council's website. I wonder how East Riding of Yorkshire Council will respond to my suggestion.

Thursday, 21 May 2015

Monday, 11 May 2015

Heights & OS

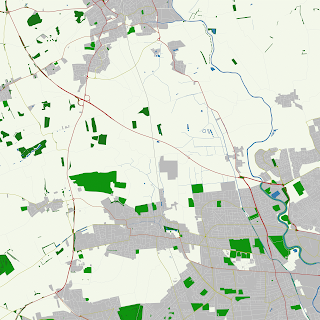

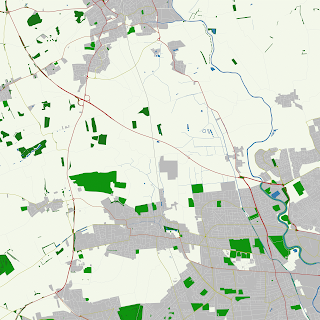

Working with Digital Elevation Models (DEM) is an interesting extension to creating maps, which are usually a flat representation of part of the world. I really want to find a way to show elevation in a way that is a bit different from a flat map. Working with the OS DEM data has whetted my appetite to try something new, but first I need a map to work with.

The most detailed DEM data I have is based on Ordnance Survey OpenData, so creating a map in the OS projection will be useful. I use TileMill to create maps from OSM data.

Firstly I needed OSM data in the Ordnance Survey projection. That means loading some OSM data into a fresh PostgreSQL database. I created a PostgreSQL database and, as usual, added the PostGIS extension. This creates a table called spatial_ref_sys that includes the OS projection, amongst many others. I often add the hstore extension too, but this is a simple map so I didn't need it.

    createdb -E UTF8 EYOS
    echo "CREATE EXTENSION postgis;" | psql -d EYOS

I loaded an extract of OSM data using the usual osm2pgsql utility, except that the projection option was needed too, to convert the data to the OS projection as it is loaded.

    osm2pgsql --slim -d EYOS -C 1024 ey.osm.pbf --proj 27700

I decided to add a coastline, so that needed to be in OS projection too. OSM coastlines are handled differently from all other data. They are extracted from the main DB, checked for consistency and made into a shapefile for the world. This is known as processed_p.shp. I have my own cut-down copy with only the British Isles in it to make rendering a bit quicker. I reprojected that to a copy in OS projection using ogr2ogr, part of the Geospatial Data Abstraction Library.

    ogr2ogr -t_srs 'EPSG:27700' -s_srs 'EPSG:3857' coast_bi_os.shp coast_bi.shp

Armed with all of this I could now start TileMill and add the layers I need for the map. Each of the layers, including the coast shapefile, needed a custom projection. This is:

    +proj=tmerc +lat_0=49 +lon_0=-2 +k=0.9996012717 +x_0=400000 +y_0=-100000 +ellps=airy +towgs84=446.448,-125.157,542.060,0.1502,0.2470,0.8421,-20.4894 +units=m +no_defs

I got this from the spatial_ref_sys table that PostGIS created above. Once I had designed the map as I wanted it, I exported the Mapnik XML and ran it through Mapnik. I discovered that the Mapnik XML was not quite right. It needed to have the third line changed so the srs part matches the custom projection above. There doesn't seem to be a way to set this in TileMill, so a manual edit was needed.
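The manual edit could probably be scripted if the map gets exported often. Here is a rough sketch, assuming the exported file opens with a `<Map ... srs="...">` tag as mine did. The function name `set_map_srs` is made up for this example, and it only touches the `srs` on the `<Map>` tag, leaving the layers alone.

```python
import re

# The OS projection string (EPSG:27700) written out in full.
OS_SRS = (
    "+proj=tmerc +lat_0=49 +lon_0=-2 +k=0.9996012717 +x_0=400000 "
    "+y_0=-100000 +ellps=airy "
    "+towgs84=446.448,-125.157,542.06,0.1502,0.247,0.8421,-20.4894 "
    "+units=m +no_defs"
)

# Matches the srs attribute inside the opening <Map ...> tag only.
MAP_SRS = re.compile(r'(<Map\b[^>]*?\bsrs=")[^"]*(")')


def set_map_srs(xml_text, srs):
    """Return xml_text with the srs of the <Map> element replaced."""
    # A function is used as the replacement so that nothing in the
    # projection string is treated as a regex escape.
    return MAP_SRS.sub(lambda m: m.group(1) + srs + m.group(2), xml_text, count=1)
```

Reading the exported XML into a string, passing it through `set_map_srs(text, OS_SRS)` and writing the result back should do the same job as the edit by hand.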

To run the Mapnik XML through Mapnik I used the following python code:

    #!/usr/bin/python
    # Generate a map image in the OS projection (EPSG:27700)

    import mapnik


    def drawMap(filename, west, south, east, north):
        print(filename)
        sz = 5000  # the output image is sz x sz pixels
        ossrs = "+proj=tmerc +lat_0=49 +lon_0=-2 +k=0.9996012717 +x_0=400000 +y_0=-100000 +ellps=airy +towgs84=446.448,-125.157,542.06,0.1502,0.247,0.8421,-20.4894 +units=m +no_defs"
        m = mapnik.Map(sz, sz, ossrs)
        mapnik.load_map(m, "osproj.xml")
        # Bounding box in OS eastings and northings (metres).
        # Box2d is the current name for what older Mapnik releases called Envelope.
        bbox = mapnik.Box2d(west, south, east, north)
        m.zoom_to_box(bbox)
        im = mapnik.Image(sz, sz)
        mapnik.render(m, im)
        view = im.view(0, 0, sz, sz)  # x, y, width, height
        view.save(filename, 'png')


    if __name__ == '__main__':
        drawMap('cott.png', 500000, 430000, 510000, 440000)

That long-winded projection was needed again. Notice the coordinates in the drawMap function are OS coordinates, not longitude and latitude. Everything must match the chosen projection.
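The OS coordinates for a bounding box can be worked out from the name of a 10 km grid square rather than looked up. This is a small sketch of the usual letter arithmetic for the National Grid; the function names `square_origin` and `tile_bbox` are my own for this example.

```python
def square_origin(letters):
    """Easting and northing (metres) of the SW corner of a 100 km square."""
    first = ord(letters[0].upper()) - ord("A")
    second = ord(letters[1].upper()) - ord("A")
    # The grid lettering skips the letter I, so shift later letters down one.
    if first > 7:
        first -= 1
    if second > 7:
        second -= 1
    # The first letter picks a 500 km square, the second a 100 km square in it.
    e100 = ((first - 2) % 5) * 5 + (second % 5)
    n100 = (19 - (first // 5) * 5) - (second // 5)
    return e100 * 100000, n100 * 100000


def tile_bbox(tile):
    """Bounding box (west, south, east, north) of a 10 km tile such as 'TA03'."""
    e0, n0 = square_origin(tile[:2])
    west = e0 + int(tile[2]) * 10000
    south = n0 + int(tile[3]) * 10000
    return west, south, west + 10000, south + 10000
```

For example `tile_bbox("TA03")` returns `(500000, 430000, 510000, 440000)`, which is the box passed to drawMap for the square with Cottingham in the middle.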

This gives me an image of the map in the OS projection, but the style could be any style you choose, though I'd be wary of copying the OS style too closely. This will now match the DEM data if they are combined. My style is still a bit stark and only renders a few objects, but it is something to work with.

Next I need to use it imaginatively.

Friday, 8 May 2015

Heights

I've been working on something locally for a while that benefits from maps. It needs height information displayed, so I thought I'd take a closer look at what was available, especially Digital Elevation Model (DEM) data.

OSM doesn't hold much height information, so when people want to display heights they turn to outside information. One such source is Shuttle Radar Topography Mission data or SRTM. One Space Shuttle mission flew around the world and mapped the heights of the ground below using radar. This data has been published as open data. The data points are spaced at 1 second of arc for the USA and 3 seconds of arc for the rest of the world. This gives a height data point about every 90m for the UK. There are issues with this data, with some places having voids where the radar return didn't register. It is usual for people who use this with OSM to render this data as contour lines or as hill shading or both as a way of visualising the height. I thought I'd do some simple processing to be sure I understood the data format.

[Image: SRTM]

SRTM data is published in 1° squares. I read the height values and displayed them as a shade of green, since human eyes can distinguish more shade variations in green than any other colour. Any voids I show as black (there were nine void pixels in this square) and any small negative value (small, so not a void) I show as blue. There's a lot of interest in this which I'd not noticed looking at contours. The dark area top left is the Vale of York and the green area top centre is the bottom end of the Yorkshire Wolds. You can just make out the Humber estuary just above the centre and to the right. The bright green area bottom right is part of the Lincolnshire Wolds. The valleys with tributaries feeding into the Vale of York are interesting. None of those exist as rivers or streams today, so I expect they are remnants of the retreating ice caps about ten thousand years ago, when the ground was still permafrost so any melting water cut river channels. Today the water table is much lower, with the chalk of the Wolds allowing water to drain into it.

Next I looked at Ordnance Survey (OS) OpenData. They release height data as contours and spot heights in shapefile format and DEM data too. The DEM is 50m spacing and should be free of voids. They use their own projection (EPSG:27700) for all of their data and this works better for the UK for some jobs. OS release some of their data in parcels based on their own grid. I am interested in a section including Cottingham, a large village west of Hull. The OS square TA03 has Cottingham in the middle of it, so that is helpful.

[Image: OS TA03 square]

I created a similar image from the OS DEM data. I deliberately emphasised the height differences more than in the SRTM image. The area is much smaller than the SRTM area but more detailed. The bright green on the left is the edge of the Yorkshire Wolds. The blue line is the river Hull, which cuts through the middle of the city of Hull. For comparison, the bottom of the blue smudge on the SRTM image is approximately where the OS image is. Again valleys are shown, though this time running west to east. Again they are dry (though very occasionally not, which is part of what I'm investigating). I've decided that there is enough detail in the OS area and that it is big enough, perhaps with one more alongside it, to show what I want, so I'll work with that.

More of what to do with it later.

The python code to produce the SRTM image is here:

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-

    import struct

    from PIL import Image

    VOID = -32768  # SRTM marker for a missing sample


    def getpix(val):
        # black for voids, blue below zero, otherwise a shade of green
        if val == VOID:
            return (0, 0, 0)
        if val < 0:
            return (0, 0, 255)
        return (0, min(255, int(val)), 0)


    if __name__ == "__main__":
        highest = -5000
        lowest = 5000
        tile = "N53W001.hgt"
        # make the new empty (white) image
        im = Image.new("RGB", (1201, 1201), "white")
        with open(tile, "rb") as f:
            # scan through each of the heights in the file and colour a pixel
            for n in range(1201):
                for e in range(1201):
                    buf = f.read(2)
                    # each sample is a big-endian signed 16-bit integer
                    pt = struct.unpack('>h', buf)[0]
                    if pt == VOID:
                        print('VOID {0} {1} {2}'.format(n, e, pt))
                    if pt < lowest:
                        lowest = pt
                    if pt > highest:
                        highest = pt
                    im.putpixel((e, n), getpix(pt))
        print('lowest:{0}, highest:{1}'.format(lowest, highest))
        im.save('h.png')

The code to produce the OS image is here:

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-

    from PIL import Image


    def getpix(num):
        # blue below zero, otherwise green with the height exaggerated
        val = round(num)
        if val < 0:
            return (0, 0, 255)
        return (0, min(255, int(val) * 3), 0)


    if __name__ == "__main__":
        osf = "TA03.asc"
        # make the new empty (white) image
        im = Image.new("RGB", (200, 200), "white")
        with open(osf, "r") as f:
            lines = f.readlines()
        # skip the 5 header lines, then read 200 rows of 200 heights
        for i in range(5, 205):
            s = lines[i].split()
            for idx, val in enumerate(s):
                im.putpixel((idx, i - 5), getpix(float(val)))
        im.save('o.png')
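The SRTM script reads every sample in turn, but a single height can also be read directly by seeking to the right place in the file. This is a sketch rather than tested production code: it assumes a 3 arc-second tile of 1201 x 1201 samples stored north to south, and the function name `read_height` and its parameters are only for illustration. The tile name gives the south-west corner, so N53W001 has `sw_lat=53` and `sw_lon=-1`.

```python
import struct

SAMPLES = 1201  # samples per row and per column in a 3 arc-second tile
VOID = -32768   # SRTM marker for a missing sample


def read_height(path, sw_lat, sw_lon, lat, lon):
    """Return the height in metres nearest to lat/lon, or None for a void."""
    # Row 0 is the northern edge of the tile, column 0 the western edge.
    row = int(round((sw_lat + 1 - lat) * (SAMPLES - 1)))
    col = int(round((lon - sw_lon) * (SAMPLES - 1)))
    if not (0 <= row < SAMPLES and 0 <= col < SAMPLES):
        raise ValueError("point lies outside this tile")
    with open(path, "rb") as f:
        # Each sample is 2 bytes, stored row by row.
        f.seek((row * SAMPLES + col) * 2)
        (height,) = struct.unpack(">h", f.read(2))
    return None if height == VOID else height
```

Calling `read_height("N53W001.hgt", 53, -1, lat, lon)` with a latitude and longitude inside the tile would give the nearest sample without loading the whole file.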

Monday, 16 February 2015

Drone deliveries

The US FAA has ruled that drones need to remain within sight of the operator. This is a major obstacle to companies planning deliveries in the US by drone. I had wondered what kind of mapping such an enterprise would need. Where would such a delivery be made? If the address has a garden then that might be useful, but what if there are plants or garden furniture in the way? If the address has a driveway then that could be a good landing site, but if the delivery sits on the driveway what's to stop it being stolen or driven over by a car arriving? If the address is an apartment on a street front will the parcel just be dumped on the street? How could a drone safely land on a sidewalk?

When a delivery is made by hand it is handed over at a doorway, posted into a mailbox or left by a thinking person in a suitable place. A person can gain access to places like a shared lobby too. Can a drone do any of this?

Maybe would-be delivery addresses need to designate a landing site for drones. Maybe that would need signage and access restrictions and possibly even extra insurance.

The more I think about it, the mapping needed to control this would be very detailed and very specific. I suspect that the FAA ruling is the least of the problems this idea has.
